Guide

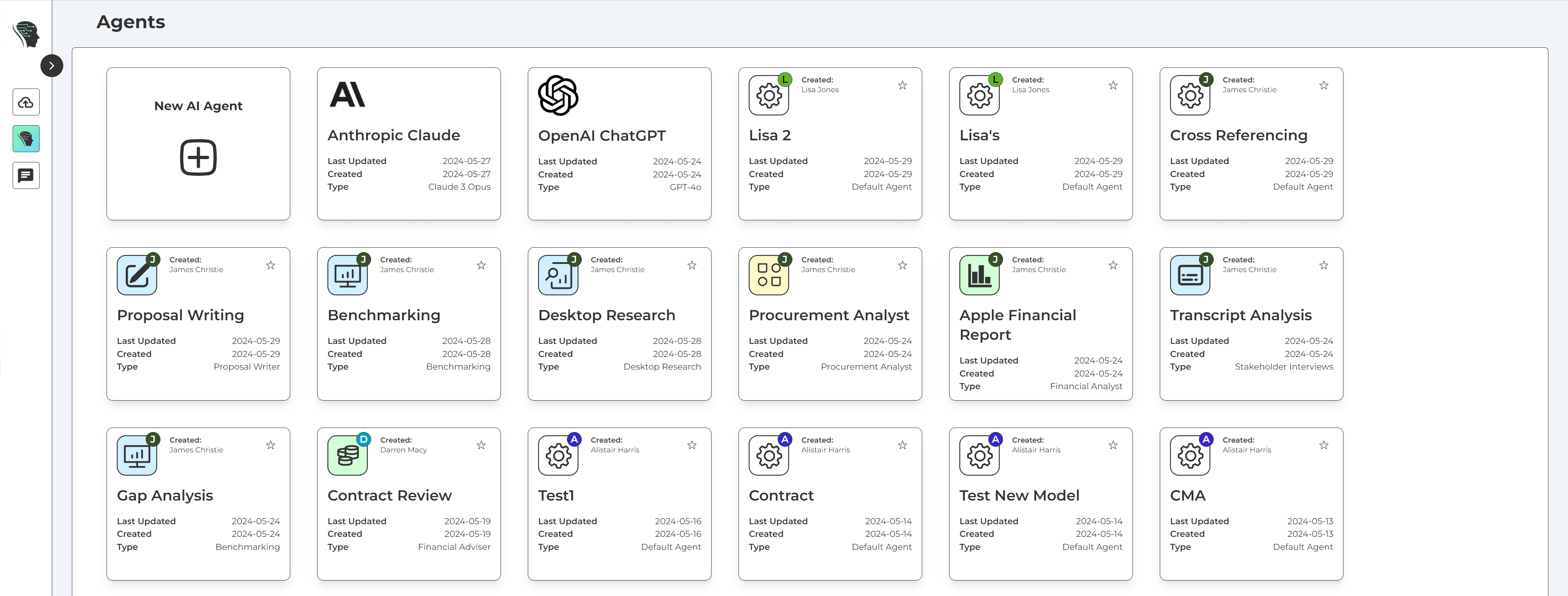

AI Agents

Setting up your AI Agents

AI Agents are at the heart of the Echobase Platform. They let you create agents that draw on your own files and settings to produce and analyze material accurately using artificial intelligence.

To create an Agent, select “New Agent” from the Dashboard screen. This is best done after you have uploaded files; for more information, see File Management.

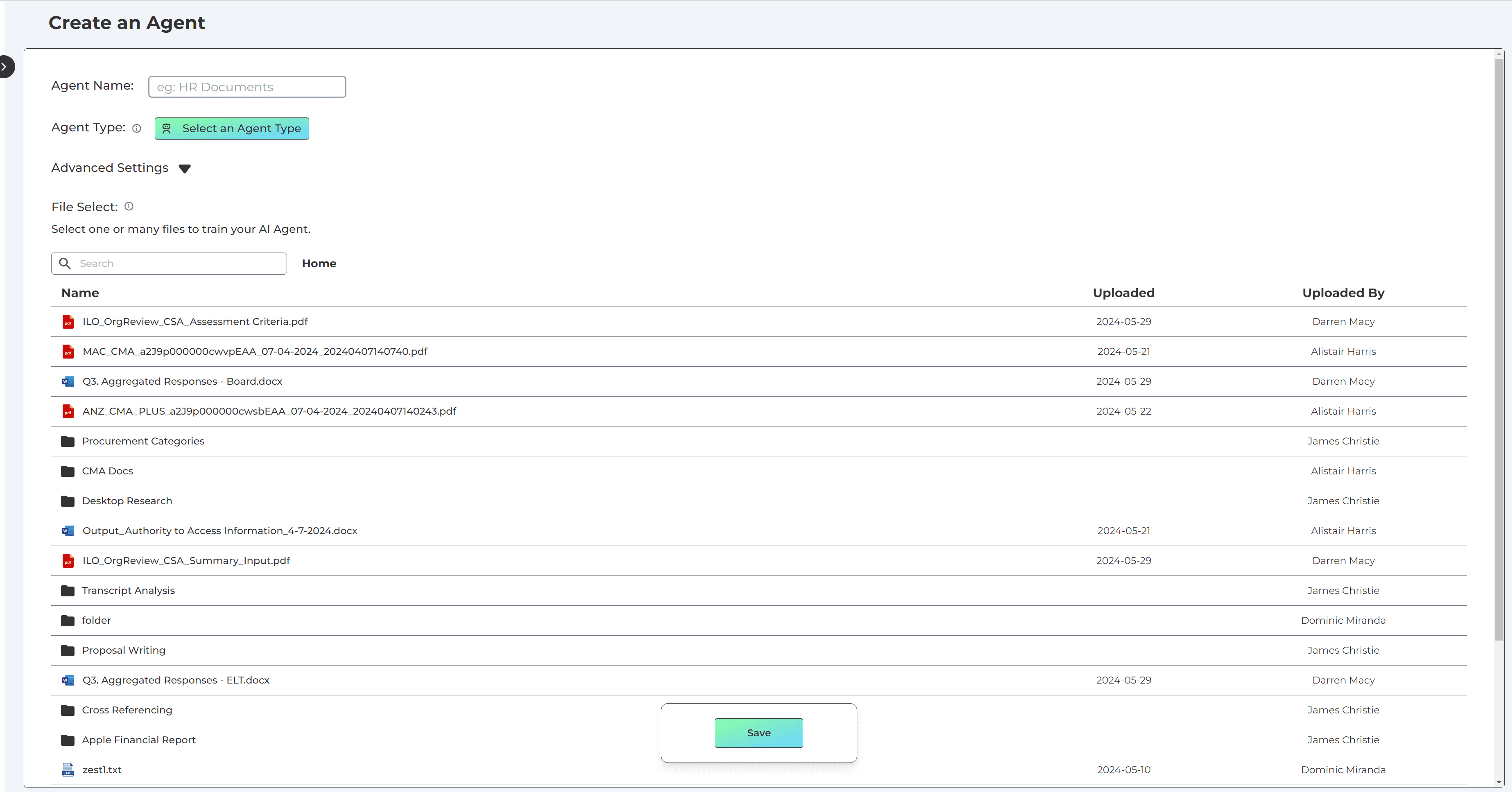

This will bring up an interface that lets you select different options and enable specific features for your agent.

Models

Echobase gives you access to the latest leading AI models. We believe no single model will rule them all; each model has its own strengths, weaknesses, and best uses.

GPT-4o: In our view, GPT-4o is currently the best available model in terms of pure output quality. It has a 128k-token context window and is among the fastest and most accurate models available.

Claude Opus: Claude Opus is Anthropic's most powerful model. It has a 200k-token context window and, in our view, ranks second among currently available AI models.
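A context window is the maximum number of tokens a model can work with in one request, covering the prompt, any file content sent with it, and the response. The minimal sketch below checks whether a request fits, assuming the limits quoted above (128,000 tokens for GPT-4o and 200,000 for Claude Opus). The model labels and the `fits_in_context` helper are hypothetical and not part of Echobase; real token counts come from each provider's tokenizer.

```python
# Context window sizes in tokens, as quoted in this guide.
# The keys are illustrative labels, not official API model IDs.
CONTEXT_WINDOWS = {
    "gpt-4o": 128_000,
    "claude-opus": 200_000,
}


def fits_in_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """Return True if the prompt plus the reserved response length fits the window.

    Hypothetical helper: token counts must come from the provider's tokenizer.
    """
    limit = CONTEXT_WINDOWS[model]
    return prompt_tokens + max_output_tokens <= limit


# A 150,000-token set of files, leaving room for a 4,000-token answer.
for model in CONTEXT_WINDOWS:
    print(model, fits_in_context(model, 150_000, 4_000))
```

With these numbers the request (154,000 tokens in total) exceeds GPT-4o's window but fits within Claude Opus's, which is one reason a larger context window matters when an agent works over many files.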

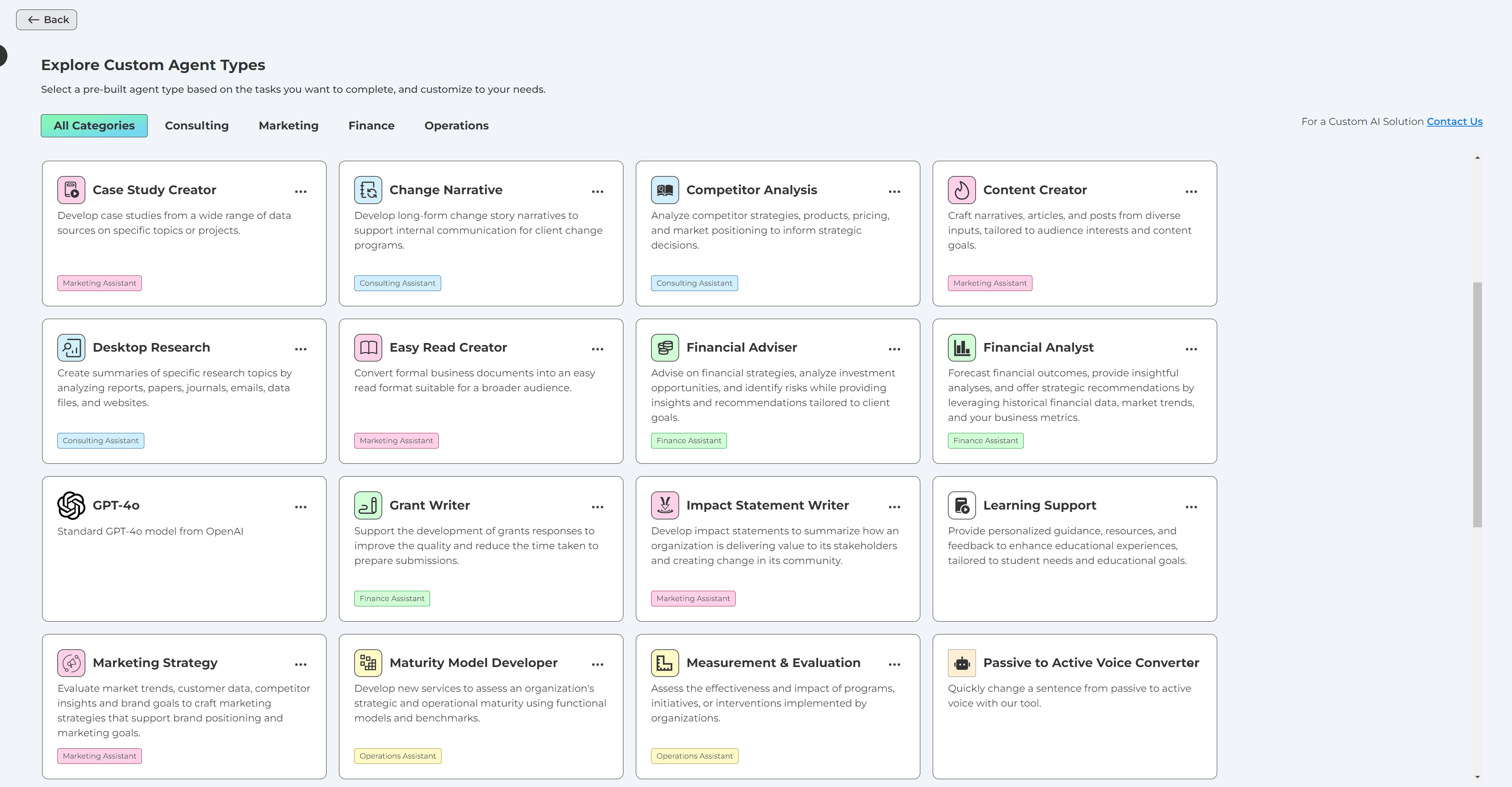

Types

Types are pre-built agents whose settings have been carefully configured for a specific task. Choose from more than 50 pre-built agents across consulting, operations, marketing, and finance.

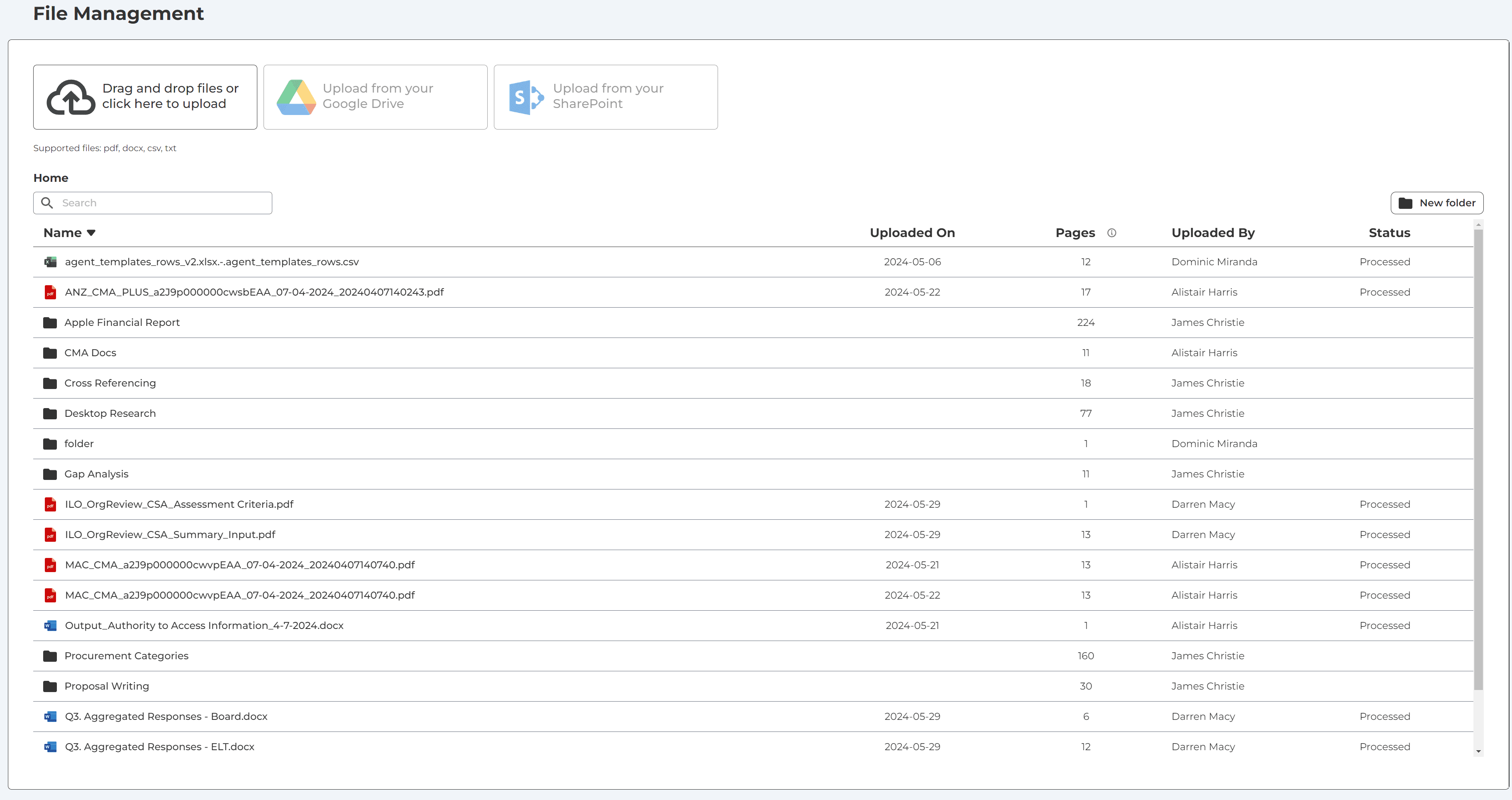

Files

Files are the knowledge base for Functional AI Agents. Upload relevant information to these folders, because the Agent relies on it to answer queries accurately. Choose an existing dataset to connect it to your agent.

Features

Features are advanced capabilities you can add to your Functional AI Agents, such as source attribution, suggested follow-up questions, and speech-to-text input.

Show Sources: The "Show Sources" feature shows which document a response draws on, and where in that document the information comes from.

Follow-up Prompts: Follow-up prompts let the AI suggest additional prompts that guide you through the conversation and help you develop your questions.

Speech To Text: Speech to text lets you speak prompts into your microphone as well as type them.

Select your Features from the ‘Create an Agent’ screen.

Advanced Settings

Advanced Settings let you fine-tune your agent in detail. Designed for power users, system administrators, and anyone who needs granular control, they let you tailor the agent's behavior to your requirements.

Some of these settings can significantly change how the agent behaves. If you are unsure about an option, consult the help documentation or contact our support team for guidance.

System Prompt: The system prompt is a set of instructions given to the AI model before the conversation begins. It defines the agent's role, tone, and rules, and it applies to every response the agent produces.

For example, an agent for a finance team might use a system prompt such as: "You are a financial analyst. Answer only from the uploaded files, name the document each figure comes from, and say so if the files do not contain the answer."

Where a user's prompt asks a specific question, the system prompt sets the standing context in which every question is answered.

System prompts can be open-ended or specific, depending on how tightly you want to control the agent's behavior. Their design and phrasing play a significant role in the quality and direction of the AI's responses.
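Chat models typically receive the system prompt as a separate message placed ahead of the conversation. The minimal sketch below builds such a message list in the role/content shape used by OpenAI's Chat Completions API (Anthropic's API instead takes the system prompt as a separate top-level parameter). The `build_messages` helper and the sample prompt are hypothetical; Echobase's internal format is not documented here.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical system prompt for a marketing agent.
SYSTEM_PROMPT = (
    "You are a marketing copywriter. Keep answers under 150 words "
    "and use a friendly, professional tone."
)


def build_messages(system_prompt: str, history: List[Message], user_prompt: str) -> List[Message]:
    """Place the system prompt first so it governs every turn that follows."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # earlier user/assistant turns, oldest first
    messages.append({"role": "user", "content": user_prompt})
    return messages


msgs = build_messages(SYSTEM_PROMPT, [], "Draft a tagline for our spring sale.")
for m in msgs:
    print(m["role"], "->", m["content"][:40])
```

Keeping the system prompt as its own first message, rather than pasting it into each user prompt, means it is sent once per request and stays in force however long the conversation runs.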

Temperature:

Temperature is a setting applied during text generation that controls how random the model's output is.

A higher temperature (e.g., 0.8 or 1.0) makes the model's output more random. This means the model is more likely to produce diverse or unexpected responses, but they might sometimes be less coherent or focused.

A lower temperature (e.g., 0.2 or 0.1) makes the model's output more deterministic and focused. It will tend to stick to more common or expected responses, which can make the output more consistent, but potentially less creative or diverse.
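Temperature is commonly applied by dividing the model's raw score (logit) z_i for each candidate token by the temperature T before converting the scores to probabilities with softmax: p_i = exp(z_i / T) / Σ_j exp(z_j / T). The minimal sketch below shows that effect on three made-up logits; the values and word choices are illustrative, not taken from any real model.

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw token scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens: Sequence[str], logits: Sequence[float],
                 temperature: float, rng: random.Random) -> str:
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Illustrative scores for three candidate next words.
tokens = ["report", "summary", "poem"]
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(42)
print(sample_token(tokens, logits, 1.0, rng))
```

At T = 0.2 almost all of the probability lands on the top-scoring word, so sampling nearly always picks it; at T = 1.0 roughly a third of the probability goes to the other two words, which is where the extra variety (and occasional incoherence) comes from.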

By adjusting the temperature, you can tune the trade-off between randomness and determinism in the model's responses. For many applications, it is worth experimenting with a few temperature settings to find the balance that gives the output quality you want.